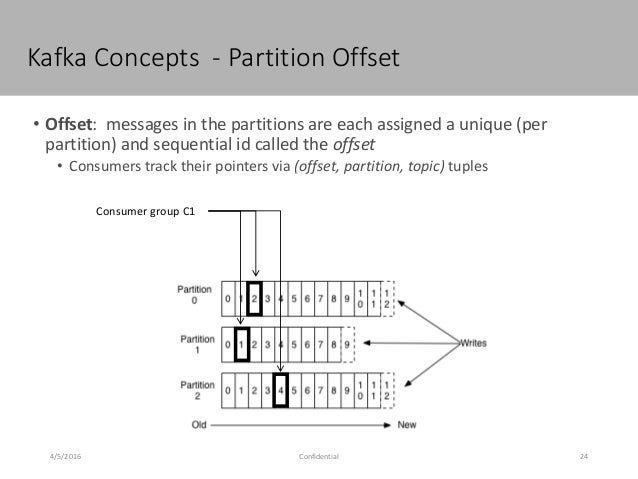

- `…`: Used when trying to commit offsets for the tasks.
- `…mit`: If set to `true`, the consumer's offsets are committed periodically in the background.
- `…offset.reset`: Defines what to do when there is no initial offset in Kafka:
  - Earliest: automatically reset to the earliest offset.
  - Latest: automatically reset to the latest offset.
  - None: throw an exception to the consumer if no previous offset is found.
  - Anything else: throw an exception to the consumer.
- `…tes`: More indexing lets a read jump closer to the exact position in the log, but makes the index larger.
- `…`: Keep this value small to facilitate faster log compaction and quicker cache loads.
- `…`: Defines the replication factor of the Kafka offsets topic. A higher value keeps more copies of the data and therefore improves its availability.
- `…`: Defines the number of partitions for the offset commit topic. Make sure this value does not change after deployment.
- `…`: The compression codec for the Kafka offsets topic. Compression can be used to achieve "atomic" commits.
- `…retention.minutes`: When a consumer group has lost all of its consumers (in other words, it has become empty), its offsets are kept for this retention period before being discarded. For standalone consumers, offsets expire based on the time of the last commit plus the retention period.
- `…`: The frequency at which to check for stale offsets.
- `…`: Defines the batch size for reading from the offsets segments when loading offsets into volatile storage. It is overridden if records are too large and keep arriving at high frequency.
- `…`: An offset commit is delayed until all replicas of the offsets topic receive the commit, or until this timeout is reached. It is similar to the producer request timeout.
- `…`: The number of acknowledgments required before a commit is accepted. In general, the default value of -1 should not be overridden.
- `…`: The maximum size for a metadata entry associated with an offset commit.
- `….interval.ms`: The frequency at which the persistent record of the log start offset is updated.
- `…`: The frequency, in ms, at which the log flusher checks whether any log needs to be flushed to disk.
- `….ms`: The frequency at which the persistent record of the last flush is updated. The last flush acts as the log recovery point.

Support is also available via Discord or GitHub :-). We also have a business version which offers additional features such as audit logging in Kowl and authentication & authorization around Kowl. These features are mostly relevant for larger organizations where Kowl is used by hundreds of users. Documentation can be found here: cloudhut.
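The `…mit` entry in the property list above describes offsets being committed periodically in the background. The Python below is a minimal simulation of that idea. It assumes the commit is checked on each poll against a fixed interval. `AutoCommitter`, `enabled`, and `interval_ms` are hypothetical names chosen for illustration, not part of any Kafka client API.

```python
class AutoCommitter:
    """Hypothetical helper that mimics periodic background offset commits."""

    def __init__(self, enabled, interval_ms, start_ms=0):
        self.enabled = enabled          # the on/off switch from the entry above
        self.interval_ms = interval_ms  # assumed fixed commit interval
        self.last_commit_ms = start_ms  # time of the previous commit
        self.committed = None           # last offset recorded as committed

    def on_poll(self, position, now_ms):
        """Call once per poll; commits `position` if the interval has elapsed."""
        if not self.enabled:
            return False  # nothing is committed automatically
        if now_ms - self.last_commit_ms >= self.interval_ms:
            self.committed = position
            self.last_commit_ms = now_ms
            return True
        return False


committer = AutoCommitter(enabled=True, interval_ms=5000)
for now_ms, position in [(1000, 10), (5000, 20), (9000, 30), (10000, 40)]:
    committer.on_poll(position, now_ms)
print(committer.committed)  # 40
```

One consequence of committing on a timer is that any records consumed after the last periodic commit are not recorded as committed. A consumer that restarts at that point would read them again.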
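The `…offset.reset` entry in the property list above names four behaviors for a missing initial offset. This sketch models them as a pure function. The lowercase policy strings, `resolve_start_offset`, and `NoOffsetForPartitionError` are hypothetical. The policy applies only when no committed offset exists, matching the entry's wording.

```python
class NoOffsetForPartitionError(Exception):
    """Hypothetical error for the "None" policy when no previous offset exists."""


def resolve_start_offset(committed, log_start, log_end, policy):
    """Return the offset a consumer would start reading from.

    committed: previously committed offset, or None if there is none.
    log_start, log_end: earliest and latest offsets currently in the log.
    policy: "earliest", "latest", "none", or any other string.
    """
    if committed is not None:
        return committed  # in this sketch the policy only applies without an offset
    if policy == "earliest":
        return log_start  # automatically reset to the earliest offset
    if policy == "latest":
        return log_end  # automatically reset to the latest offset
    if policy == "none":
        raise NoOffsetForPartitionError("no previous offset found")
    # Anything else: throw an exception to the consumer.
    raise ValueError(f"unsupported reset policy: {policy!r}")


print(resolve_start_offset(None, 10, 100, "earliest"))  # 10
print(resolve_start_offset(None, 10, 100, "latest"))    # 100
print(resolve_start_offset(42, 10, 100, "latest"))      # 42
```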
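The `…tes` entry in the property list above describes a trade-off: more index entries let a read land closer to the exact position, but the index grows. The hypothetical sketch below builds a sparse index of `(offset, byte_position)` pairs about every `index_interval_bytes` and finds the nearest entry with `bisect`. It only illustrates the trade-off. It does not reproduce Kafka's on-disk index format or its exact rule for when an entry is added.

```python
import bisect


def build_sparse_index(record_sizes, index_interval_bytes):
    """Return (offset, byte_position) pairs, one roughly every index_interval_bytes."""
    index = []
    position = 0                              # byte position of the current record
    bytes_since_entry = index_interval_bytes  # forces an entry for offset 0
    for offset, size in enumerate(record_sizes):
        if bytes_since_entry >= index_interval_bytes:
            index.append((offset, position))
            bytes_since_entry = 0
        position += size
        bytes_since_entry += size
    return index


def lookup(index, target_offset):
    """Return the last index entry at or before target_offset (index must be non-empty)."""
    offsets = [offset for offset, _ in index]
    i = bisect.bisect_right(offsets, target_offset) - 1
    return index[i]


sizes = [100] * 10                       # ten records of 100 bytes each
fine = build_sparse_index(sizes, 100)    # more indexing
coarse = build_sparse_index(sizes, 400)  # less indexing
print(len(fine), lookup(fine, 7))        # 10 (7, 700)
print(len(coarse), lookup(coarse, 7))    # 3 (4, 400)
```

With the coarse index, a read for offset 7 starts at byte 400 and must scan records 4, 5 and 6 first. The fine index jumps straight to offset 7 but stores ten entries instead of three.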
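The `…retention.minutes` entry and the stale-offset check entry in the property list above work together. Offsets expire after a retention period, and a periodic task looks for expired ones. The sketch below encodes the two expiry rules from the text. For an empty group, the retention clock starts when the group became empty. For a standalone consumer, it starts at the last commit. `offset_expired`, `purge_stale`, and the dictionary layout are hypothetical. The `>=` boundary is an assumption of this sketch.

```python
MS_PER_MINUTE = 60_000


def offset_expired(now_ms, retention_minutes, last_commit_ms,
                   group_empty_since_ms=None, standalone=False):
    """Return True if a committed offset is past its retention period."""
    retention_ms = retention_minutes * MS_PER_MINUTE
    if standalone:
        # Standalone consumers: last commit time plus the retention period.
        return now_ms >= last_commit_ms + retention_ms
    if group_empty_since_ms is None:
        return False  # the group still has consumers, so its offsets are kept
    # The group became empty: keep offsets for the retention period, then discard.
    return now_ms >= group_empty_since_ms + retention_ms


def purge_stale(store, now_ms, retention_minutes):
    """One run of the periodic stale-offset check; deletes expired entries in place."""
    expired = [key for key, entry in store.items()
               if offset_expired(now_ms, retention_minutes, **entry)]
    for key in expired:
        del store[key]
    return expired


store = {
    "standalone-consumer": {"last_commit_ms": 0, "standalone": True},
    "active-group": {"last_commit_ms": 0},
    "empty-group": {"last_commit_ms": 0, "group_empty_since_ms": 30 * MS_PER_MINUTE},
}
print(purge_stale(store, now_ms=60 * MS_PER_MINUTE, retention_minutes=60))
# ['standalone-consumer']
```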
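Two entries in the property list above describe when an offset commit completes. The commit waits for replica acknowledgments, with a default of -1, or it stops waiting once a timeout is reached. The sketch below models that decision only. It assumes -1 means every listed replica must acknowledge, and it does not model Kafka's actual request handling. `commit_outcome` and its parameters are hypothetical.

```python
def commit_outcome(ack_times_ms, required_acks, timeout_ms):
    """Return ("committed", t) or ("timeout", timeout_ms) for one offset commit.

    ack_times_ms: when each replica acknowledges, in ms after the commit
    request, or None if it never does. required_acks: -1 means every replica.
    """
    needed = len(ack_times_ms) if required_acks == -1 else required_acks
    if needed < 1:
        raise ValueError("this sketch needs at least one acknowledgment")
    in_time = sorted(t for t in ack_times_ms if t is not None and t <= timeout_ms)
    if len(in_time) >= needed:
        # The commit completes when the last required acknowledgment arrives.
        return ("committed", in_time[needed - 1])
    return ("timeout", timeout_ms)


print(commit_outcome([5, 12, 30], -1, 5000))    # ('committed', 30)
print(commit_outcome([5, None, 30], -1, 5000))  # ('timeout', 5000)
print(commit_outcome([5, None, 30], 1, 5000))   # ('committed', 5)
```

The second call shows why the entry advises against lowering the default. With -1, one missing acknowledgment holds the commit until the timeout. That delay is the price of knowing every replica has the committed offset.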

Last but not least: it's open source, so it has no restrictions such as a user limit or a web version that has to be paid for.

I'm one of the authors of Kowl (/cloudhut/kowl), and compared to the listed solution I think it stands out for its superior UI/UX. The UI does not overwhelm you with a lot of information, and it has a proper JSON viewer (Protobuf, Avro and XML messages are rendered as JSON as well). When deserializing messages you don't have to select codecs: it automatically detects the appropriate one! It is also lightweight (written in Go and React) and easy to run (a Docker container without dependencies).

# Kafka offset explorer free

Kowl - Free & Open source WebUI for Kafka
